Since AI is part of our everyday lives, it’s essential to discuss the relationship between AI and psychology. Can they work well together, or is it a case of human versus machine intelligence?

In an academic paper titled “The Psychology of Artificial Intelligence: Analyzing Cognitive and Emotional Characteristics, Human-AI Interaction, and Ethical Considerations,” author Beka Dadeshkeliani highlights that modern AI is becoming increasingly proficient at processing large amounts of data. It is also becoming adept at solving logical problems and recognising patterns. In other words, AI is becoming capable of performing many of the cognitive tasks that humans can perform.

But does this mean that AI can replace the human mind? In this post, we’ll unpack the intersection of psychology and AI, as well as some ethical and other issues we should all be aware of.

Can AI replace the human mind?

AI can appear to function like the human brain because it is designed to mimic aspects of human thinking through computer processes, including the following:

- Human-centric UX, which designs interfaces according to input from user experience research

- Emotion AI, which uses pattern recognition to classify emotional expressions in text and in faces, and to generate emotionally appropriate responses to human input (although this is not based on true emotional understanding)

AI versus human psychology

Researchers like Dadeshkeliani confirm that AI thinking remains significantly different from human thinking. AI might respond or behave in a certain way, but it doesn’t feel emotions or process information in the same way humans do. So, when it comes to emotional intelligence and AI, AI doesn’t truly have EQ. In other words, AI systems simulate human-like responses based on patterns and data, but don’t have true consciousness.

Overall, while AI can automate some of the tasks we perform, it cannot replace the human mind. As it operates through data input and programming, it lacks the same access to holistic intelligence that humans possess. Therefore, AI remains limited, as it operates under strict constraints and parameters.

Why AI still has limits

As many experts agree, AI tools are only as good as the data we use to train them. While AI can learn from data, it cannot feel empathy for another human being, even though it is natural for us to develop pseudo-intimate relationships with technology.

AI makes predictions based on statistical probabilities, but these predictions aren’t always accurate, especially when input data is flawed or incomplete. AI language models operate by identifying patterns, meaning they don’t retrieve answers from a factual database, but predict a likely answer based on the data they were trained on.
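To make the idea of predicting a likely answer more concrete, here is a minimal sketch in Python. It is a toy word-pair counter, not how ChatGPT or any real language model is built, and the function names and training sentences are hypothetical, chosen only for illustration. It shows that a purely statistical predictor repeats whatever is most common in its data, whether or not it is true.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count which word follows each word in the training text."""
    words = text.lower().split()
    follow_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follow_counts[current_word][next_word] += 1
    return follow_counts


def predict_next(model, word):
    """Return the most frequent follower of `word` and its estimated probability."""
    followers = model.get(word.lower())
    if not followers:
        return None, 0.0  # the model has never seen this word
    best_word, count = followers.most_common(1)[0]
    return best_word, count / sum(followers.values())


# Hypothetical toy training data that deliberately repeats a false statement.
training_text = (
    "the moon is made of cheese . "
    "the moon is made of cheese . "
    "the moon is made of rock ."
)

model = train_bigram_model(training_text)
word, probability = predict_next(model, "of")
print(word, round(probability, 2))  # prints: cheese 0.67
```

The model answers “cheese” simply because that word follows “of” most often in its data. It has no way of checking the claim against the real world, which is the core of the limitations described here.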

Therefore, generative AI models like ChatGPT can provide sophisticated answers, but there are many issues to consider, including the following:

- Bias: AI can inherit and reproduce harmful patterns in training data, e.g. racial, gender, cultural, or ideological inequalities.

- Hallucination: AI can generate information that sounds plausible but is entirely false, including fake citations, fake news, or non-existent statistics.

- Overconfidence: AI can present guesses as facts. As it’s trained to produce coherent and authoritative-sounding text, it often won’t indicate that it might be wrong.

AI and ethical concerns

There are also some ethical issues to consider when building a relationship with technology, particularly in the context of mental health care. As Dadeshkeliani highlights:

“On the one hand, humans naturally project social behaviours onto technology, which can be harnessed to make AI systems more user-friendly and acceptable. On the other hand, a cautious approach is necessary, ensuring that users’ emotions and expectations regarding AI do not exceed the technology’s actual capabilities.”

AI in mental health care

When it comes to mental health care, we must be especially cautious about the complex relationship between humans and machines. A recent BBC article called “Can AI therapists really be an alternative to human help?” shares various public opinions on AI and highlights how it can be helpful in certain instances. For example, AI applications are affordable and accessible 24/7, allowing them to assist those on a waiting list to speak with a counsellor or therapist.

AI and the role of psychologists

AI mental health tools, such as therapy bots, mood trackers, and Cognitive Behavioural Therapy (CBT) apps like Wysa, can serve as an initial point of contact for individuals seeking support. Recent academic papers, such as “Enhancing Mental Health with Artificial Intelligence: Current Trends and Future Prospects,” confirm that rigorously tested mental health apps, like Woebot, have proven effective in clinical trials.

However, psychologists from the American Psychological Association (APA), in an article titled “AI is changing every aspect of psychology,” generally agree that AI-assisted therapy can’t replace a supportive therapeutic relationship.

So, while there is clearly a need for increased mental health support, current AI models often fail to meet ethical and empathetic standards, with widely publicised examples of enabling suicidal ideation or delusions. Nevertheless, AI can assist human therapists with various data-driven tasks, acting as a supportive tool rather than a replacement for human interaction in mental healthcare.

Some examples of supportive tools include symptom checkers, wearable fitness trackers, and mental health chatbots. These tools are convenient and can provide early detection and personalised advice. However, we need to be aware of the following issues:

- Misinformation or misdiagnosis from symptom checkers or chatbots trained on incomplete data

- Privacy risks from health trackers that collect sensitive biometric data

- Over-reliance on automated suggestions instead of professional care

At this point, it’s helpful to consider how AI affects the way our brains work, which can help us to work better alongside it.

How AI affects our brains

Overall, we must learn about the implications of the intersection between psychology and AI, especially in the context of the Fifth Industrial Revolution (5IR), which emphasises the use of technology in a human-centric manner.

A Psychology Today article by Cornelia C. Walther called “The Psychology of AI’s Impact on Human Cognition” provides some helpful commentary on AI’s cognitive effects and how it impacts our psychological functioning, including the following key considerations:

AI and personalised content

Algorithms personalise much of the content we consume online, which means they might limit our consumption to specific information, a phenomenon known as “preference crystallisation”. We must also consider issues such as data privacy when receiving personalised content recommendations.
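A small simulation can show how this narrowing might happen. The Python sketch below is a deliberately simplified, hypothetical recommender, not a description of any real platform’s algorithm. It assumes the system always recommends the topic with the most clicks so far, and that the user clicks whatever is recommended.

```python
from collections import Counter


def simulate_feed(topics, first_click, rounds):
    """Return the topics shown by a recommender that always repeats the most-clicked topic."""
    clicks = Counter({topic: 0 for topic in topics})
    clicks[first_click] += 1  # a single initial click seeds the preference
    shown = []
    for _ in range(rounds):
        # Recommend whichever topic has the most clicks so far.
        recommended = max(topics, key=lambda topic: clicks[topic])
        shown.append(recommended)
        # Simplifying assumption: the user clicks whatever is recommended.
        clicks[recommended] += 1
    return shown


topics = ["news", "sport", "science", "music"]
feed = simulate_feed(topics, first_click="science", rounds=10)
print(Counter(feed))  # prints: Counter({'science': 10})
```

Under these assumptions, one early click is enough for the feed to show a single topic in every round. Real systems are far more complex, but the same feedback loop between what we click and what we are shown is what can narrow our preferences over time.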

AI and emotional content

As Cornelia C. Walther shares, AI systems, such as those used in social media, are designed to capture and maintain attention. To achieve this, these systems leverage our brain’s reward system by delivering emotionally charged content that triggers feelings such as outrage, joy, and anxiety.

However, if we are constantly consuming personalised and emotionally charged content, we can miss out on authentic emotional experiences (which teach us how to function in the world).

AI and critical thinking

As mentioned, AI reflects the biases and values embedded in its training data and design.

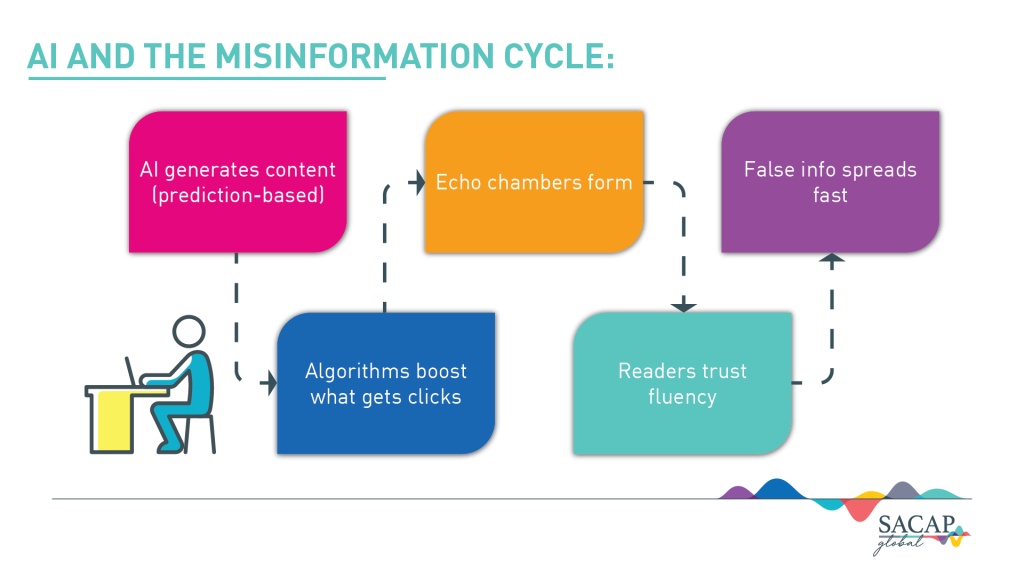

As generative AI curates content, it can also create what are known as “echo chambers”, which means we only see content that “echoes” our current viewpoints. We must be aware of how this bias affects our critical thinking, as it can lead to what researchers call “confirmation bias amplification”, which means we stop thinking for ourselves.

The problem with not thinking critically is that we can unintentionally spread misinformation, which the image below explains:

AI, screen time and mental health

In an age of AI, where we spend a lot of time staring at a screen and not engaging with the physical world, we can experience what psychologists call “nature deficit” or “embodied disconnect”. This disconnect affects how, and whether, we notice, pay attention to and process our emotions.

Awareness of how we pay attention to and process our emotions is especially relevant in an educational context. Teachers should be well-versed in the potential downsides of using AI and promote a balance between using technology and preserving what psychologists call “holistic psychological functioning”.

How to balance human versus machine intelligence

As we’ve touched on above, AI can be helpful, for example, in assisting with mental health care. Anyone who uses generative AI can also confirm that it is beneficial for research and communication purposes. Therefore, the debate between humans and machines doesn’t have to swing either way. We can strike a balance between leveraging technology to assist us and maintaining the uniqueness of our human abilities.

Tips for using AI responsibly

As Cornelia C. Walther’s commentary shares, there are many ways to balance the potential impact of using AI tools, including the following:

- Understanding how AI works and becoming more aware of its influence

- Seeking out diverse perspectives and challenging assumptions to avoid being overly influenced by algorithmic bias

- Exercising, practising mindfulness and spending time in nature to stay connected to our bodies and our innate human intelligence

The future of AI and psychology

While we need to consider the psychology behind AI, particularly in education, social media, and mental health tools, it’s possible to utilise AI tools in a balanced and beneficial way.

As we’ve mentioned, we should balance the use of technology with physical and mental wellbeing. We should also be aware of issues such as bias and data privacy, as well as the risks associated with relying too heavily on technology for the emotional support that only humans can provide.

To use AI more responsibly and develop more ethical AI tools and frameworks, it’s vital to gain a deeper understanding of how AI works, both in general and in the context of educational psychology and mental health. To help you upskill in these areas, SACAP Global offers three online courses in the ethical, professional and responsible use of AI.

These courses include the following:

- Using AI Tools in Everyday Life

- Navigating the Impact of AI in the Field of Mental Health

- AI in Education: Ethics and Innovation

FAQ:

1. Can AI understand how humans think or feel?

No, AI does not possess true emotional intelligence, although it can respond in an emotionally appropriate way to queries via machine learning and pattern recognition.

2. Will AI replace therapists or psychologists?

Through machine learning and pattern recognition, AI can respond in what appears to be an emotionally intelligent way, but it doesn’t possess true EQ. Therefore, we still need therapists and psychologists to maintain ethical and effective therapeutic relationships.

3. How is AI currently used in therapy or mental health care?

We can use AI in various ways for therapy and mental health care, including data-driven tasks, providing information, and serving as an initial point of contact for those seeking mental health support through chatbots and apps. Some apps may also help reduce symptoms.

4. What are the risks of relying on AI for emotional support?

While it’s natural for human beings to assign human qualities to technology, AI cannot truly empathise. It can provide programmed responses that might appear to meet specific emotional needs, but it cannot replace a truly supportive relationship in the long term. Therefore, the risks include a lack of proper support and a distortion of what healthy relationships should be like.

5. What role does psychology play in designing AI tools?

Psychology plays a vital role in designing AI tools, as it contributes to building sound ethical frameworks and designing AI that works effectively without impairing inherent psychological functioning.

6. How does AI impact student mental health and learning?

AI can impact mental health by affecting our cognitive processes, such as attention regulation and emotional processing. To ensure optimal mental health and learning, students must learn how to use AI in an ethical, effective, and balanced manner.